-

§ The solar eclipse was a serious spectacle. I’m glad I quickly learned that getting decent photos without a specialized camera rig is next to impossible. With that out of the way, I was able to enjoy it for what it was, in the moment.

It was cool to watch it in a crowd with thousands of other people but next time I would go out of my way to find somewhere secluded, out in nature, safe from the risk of anyone nearby playing Pink Floyd.

§ This whole celestial anomaly thing also meant I worked nine days in a row with a big busy three-day festival in the middle. Exhausting, but at least I won’t have to worry about it again for another 400 years.

I took Wednesday off to do not one but two of my favorite things: go to the DMV and file my taxes. Yeehaw.

§ The seeds have mostly all germinated. I want to try my hand at saving seeds from the most successful plants at the end of this year to plant again for the next growing season. This is, of course, the first step towards my ultimate goal of hybridizing plants.

§ We bought three more quails from a nearby homestead—our first time there.

Our existing four birds came from a combination of at-home incubation and getting live chicks shipped through the USPS, something our local post office apparently handles with some regularity.

The three new girls seem to be happy and healthy but we are going to keep them separated from the rest of the flock for a week as a precaution against bird flu.

§ It makes me happy to see that Charli XCX is continuing to push on the boundaries of radio pop. Her music might actually be getting more adventurous over time while remaining solid club classics. I can’t wait for her new album.

§ Things are accelerating with the wedding coming up in July. I set up a website, we sent our invite design to the printers, and met with our reception venue’s event manager.

§ My office move to the third floor didn’t get past Apple Health unnoticed. I got a notification that my average daily flights of stairs climbed jumped from 42 to 77.

§ The back half of this week turned incessantly wet and windy but warm enough to take the trash out in the evening without immediately feeling chilled to my core—a sure sign of spring.

§ Links

- Daylight Saving Time is a perfect test for UI designers – Tasks that occur every six months are special. They aren’t frequent enough to warrant much prominence in an interface yet they must be intuitive enough to figure out without relying on memory (most people will forget) or a user manual (it will get lost).

- Robin Sloan: “It is physical establishments — storefronts and markets, cafes and restaurants — that make cities… worth inhabiting. Even the places you don’t frequent provide tremendous value to you, because they draw other people out, populating the sidewalks. They generate urban life in its fundamental unit, which is: the bustle.”

-

§ The solar eclipse is tomorrow. Work this week was all preparation punctuated by anxiously refreshing the weather forecast. All signs point to clouds tomorrow but hey, let’s not dwell on that.

§ You know how last week I said I didn’t want to commit to reading any new books because I have so many unfinished books already? Well, I started—and finished!—Robin Sloan’s 2020 short story Annabel Scheme and the Adventure of the New Golden Gate.

Sloan’s writing has a certain lightness—an openness to imagination, a belief that all of the world’s mysteries can be solved through curiosity and adventure—that I find endearing. I loved Mr. Penumbra’s 24-Hour Bookstore for the same reason. I’m hopeful his new book will be a continuation of the theme.

§ Shōgun just keeps getting better. All rainy and tense with violence simmering just below all of the restrained formalities.

§ I’ve been watching just an inexcusable amount of Waldemar Januszczak recently. If you would like to join me, here is my suggestion: don’t look at the titles, just click on a video at random. The magic trick is that he is able to breathe life into the most mundane-seeming topics. You’ll suddenly find yourself intrigued by Dobson’s faces or fascinated by the inner lives of Gainsborough’s patrons.

§ I moved to a new office on the third floor. It is making it hard to stick to my policy of Always Take The Stairs but so far I’ve been holding strong.

§ Links

- Robot Slide Whistle Orchestrion

- Another instrument: the cristal baschet

-

§ Happy Easter

§ I started some groundcherry seeds again, after finding no success with them last year. The other big new experiment this year—other than jostaberry and garlic—is asparagus. I’m looking forward to hopefully having a perennial supply of the odd gangly vegetable. Coincidentally, this was the same week I started all of my seeds last year.

§ I’m beginning to think bay leaves might be a placebo or some elaborate culinary prank.

§ After watching Kenji’s videos for a long time, I finally bought a copy of his book. It made for a nice excuse to spend a bunch of time at the local Asian markets sourcing all of the necessary ingredients. I tried two of the recipes, Sichuan-style green beans and gong bao ji ding, which tasted great but filled my kitchen with a noxious capsaicin-infused smoke. I quite literally pepper sprayed myself. I’ve already dog-eared ten more recipes I want to try as soon as possible.

§ I know 3 Body Problem got mixed reviews so I was reluctant to give it a chance. I’m glad I ultimately did. I think it was unequivocally good from start to finish. I would say that I’m interested in reading the book now but 1) I’ve accepted the fact that I don’t have a good track record for finishing books and 2) I have enough to read in the queue already.

§ A podcast reminded me of Modest Mouse, a band I hadn’t listened to since high school. “Teeth Like God’s Shoeshine” has got to be among the greatest opening tracks of all time. It feels reminiscent of the immediate contagious energy that instantly hooked me on 3D Country a couple of months ago.

§ We’re just over a week away from the solar eclipse and I am still trying to get a good understanding of how much madness to expect. What I do know is that hundreds of thousands of visitors are expected in northeast Ohio and I happen to work at the place that is hosting, quite possibly, the largest celebration for the event.

§ Links

-

§ I spent three days in Boston on a work trip.

- After I get through TSA—and hurriedly redress at one of those flimsy metal benches—I actually really like the airport. It’s like a weird sort of liminal mall where everyone shares the same special buzzy sense of purpose.

- Flighty is a good app.

- I didn’t see a single Vision Pro but everyone was wearing AirPods.

- An hour-and-a-half-long flight is maybe the perfect length. A good excuse to disconnect from the internet for a spell and focus on something else, for once.

- A turbulent touchdown really puts things in perspective. Thinking you might imminently die is a quick and efficient way to reorder priorities. This is probably why other people like extreme sports.

- How long will planes keep that little “no smoking” indicator? The plane I was on had a WiFi indicator so obviously updates have been made in the twenty-four years since smoking was banned.

- If there is anything that kills the Suite Life of Zack & Cody dream it’s that water pressure in hotel showers is always horrendous.

- Whenever I visit a city that has Apple Maps’ Look Around feature I’m blown away by how good it is—dare I say it’s better than Google Street View. Maybe Cleveland will have it sometime before the heat death of the universe. Maybe.

- The MIT Museum was inspiring. It wasn’t until I was on my way out that I noticed that the MIT Press bookstore was in the same building. Of course I spent another hour checking out all that they had. Those two, together, could easily fill an entire day.

- Foolishly, it took me until my last day to venture over the bridge from Cambridge into Boston proper. Oh my god, Beacon Hill might be the archetype of the ideal American city. Dense, high tech, and historic with red brick sidewalks so uneven that you can’t help but stay present in the moment.

- I heard no Boston accents my entire time in the city.

§ The Gentlemen came out of absolutely nowhere as a really great show. An electric British fusion of Succession and Ozark. Give it a watch.

§ Links

-

§ A big week. Mar10, Pi Day, the Ides of March, and St. Patrick’s Day.

§ If you don’t count the snow storm on Sunday, this has been the first full week this year that has really felt like spring. The wild daffodils are nearly blooming.

§ In response to the discussion of the terms used to describe different quantities on the most recent episode of RecDiffs:

- “One”: 1

- “Couple”: 2

- “Few”: 3-5

- “Several”: 5-7

- “Dozen”: 12

A “handful” is tricky. “A handful of almonds” might be ten but if something took “a handful of tries” that might be closer to four or five.

§ I updated my blogroll to use Micro.blog’s new “recommendations” feature. Check it out! This also means that there is now an always up-to-date OPML shortlist of my favorite blogs that you can import straight into your RSS reader of choice.

§ I got fitted for my wedding suit. It was intimidating but ultimately painless and less expensive than I feared. Good news all around.

§ Apparently cabbage is “having a moment”. It looks like I might be something of a culinary trendsetter.

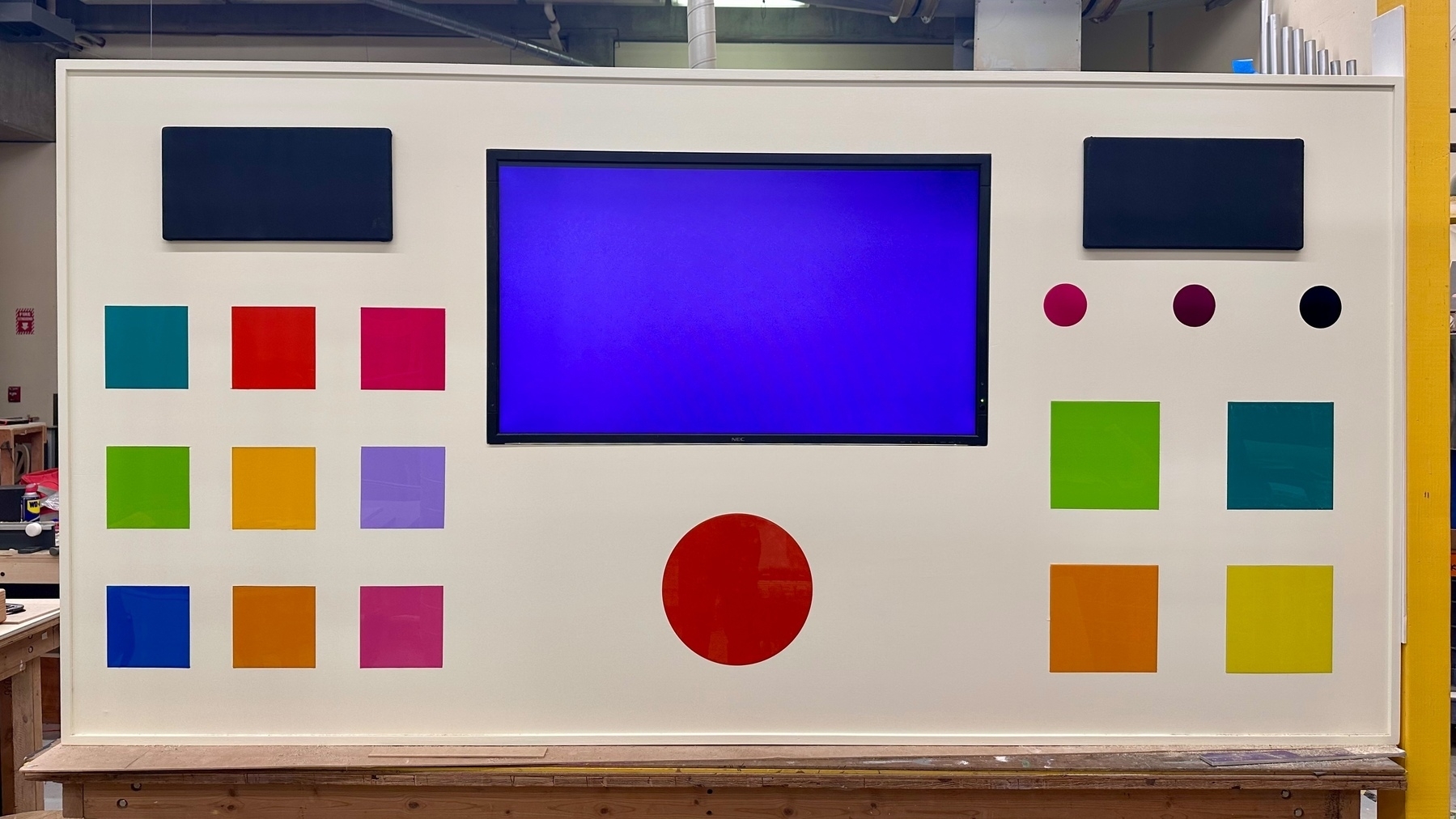

§ Lot of time spent digitally fiddling with different 3D JavaScript frameworks. We’re in the very early stages of building a new exhibit at work where children can assemble a “care package” for a loved one, learning how the U.S. postal system works in the process. I’m working on the digital side. The pitch is that, as a child adds each item to their package, they scan an embedded NFC tag which pulls up the corresponding 3D model of the item. After the package is “shipped off”, we can then generate a QR code that displays a little online preview of the items that were chosen.

I started with three.js, before remembering that everything is at least twice as complicated as it needs to be. I finally settled on A-Frame which makes much more sense, to my brain. Everything is just an HTML component: <a-box>, <a-cylinder>, etc. My prototype is very rough around the edges but I’ve realized actually building the thing helps me think about it more than anything else.
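The tag-to-model lookup described above can be sketched in a few lines. This is only an illustration, not the exhibit's actual code: the tag IDs, the catalog entries, and the `render_scene` helper are all hypothetical, and it assumes the preview page is generated server-side as A-Frame markup.

```python
from html import escape

# Hypothetical catalog: NFC tag ID -> (A-Frame primitive, color).
# The IDs and the items they stand for are made up for illustration.
CATALOG = {
    "04:A1:2B": ("a-box", "#c0392b"),       # e.g. a book
    "04:C3:4D": ("a-cylinder", "#2980b9"),  # e.g. a can of soup
    "04:E5:6F": ("a-sphere", "#27ae60"),    # e.g. a ball
}


def render_scene(scanned_tags):
    """Return A-Frame markup with one primitive per recognized tag."""
    entities = []
    for tag in scanned_tags:
        if tag not in CATALOG:
            continue  # silently skip tags that are not in the catalog
        primitive, color = CATALOG[tag]
        x = len(entities) * 1.5  # space the items out along the x axis
        entities.append(
            f'  <{primitive} position="{x} 1 -3" '
            f'color="{escape(color)}"></{primitive}>'
        )
    return "<a-scene>\n" + "\n".join(entities) + "\n</a-scene>"


if __name__ == "__main__":
    print(render_scene(["04:A1:2B", "04:E5:6F"]))
```

The returned string is just the `<a-scene>` fragment; a real page would still need to load the A-Frame script and would likely swap the primitives for proper models.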

§ T-minus, uhh, maybe eight weeks until I’ll be forced to learn how the DMX protocol works. So, look forward to that. I certainly haven’t been procrastinating on it in the meantime.

§ Dune: Part One was a treat to listen to on my fancy sound system. It was often visually stunning as well. Story wise, while it occasionally teetered over the edge into too much wacky scifi weirdness, it struck a good, relatively approachable balance most of the time. My biggest critique: it’s so long. I think it might be a bad habit pulled over from television. We don’t know how to tell tight stories anymore. Dune: Part Two is even longer, of course, but I’m excited to see it nonetheless.

§ Poor Things is one of the most interesting looking films I’ve seen in recent memory. Yorgos Lanthimos has clearly learned a thing or two about set design from Wes Anderson. The background of each shot is a treat. The switch from black and white to color was breathtaking. The costumes, the performances, the animals, the boat—captivating.

§ I’m off to Boston tomorrow—my first time traveling out of state in three years and my first time ever in that city.

Okay, I guess I better start packing.

-

§ I’m still riding out the long tail of my cold. Nevertheless, I went on a four-mile hike on Sunday and then went back out to the same trail again on Monday to take advantage of temperatures 30°F warmer than average for this time of year.

§ I took advantage of the unseasonably warm weather to clean up the garden. I planted a huge patch of onion sets, which were a surprising success as scallions last year, and in a long raised bed next to the greenhouse I planted a variety of cold-hardy salad greens: rapini, parsley, kale, spinach, and collards.

I also got a jostaberry cutting. I’ve never seen the fruit before. I guess it’s a cross between a blackcurrant and a gooseberry. I had always wanted to grow blackcurrants but didn’t want to risk spreading white pine blister rust. Apparently that isn’t a concern with the jostaberry.

§ I finally visited my local parrot shop because, well, it turns out we have a local parrot shop.

Wow, it has got to be the most overwhelming auditory environment I’ve ever experienced. Just imagine what it sounds like to have a hundred screaming macaws packed together in a tiny suburban strip mall storefront.

§ I didn’t think I was going to stick with it, but I’ve been using Apple’s Journal app daily for the past twelve-ish weeks now. It has found its own place next to Obsidian which I’ve been using to keep track of daily tasks for years. Journal is much more effective at reminding me what I did and how I felt on any particular day whereas each day’s note in Obsidian is just a bunch of checkboxes detailing what I had and hadn’t accomplished. I know I could combine the two and just keep track of all of this in Obsidian but I actually appreciate the split.

§ I got Casey Liss’ portable monitor. It is super cool. A single USB-C cable carries video and powers the monitor. Unfortunately this also led me into USB spec hell.

None of my Mac cables work at all. Same with the shorter USB C-to-C cord that came with my iPad. The tiny 4” cord for my external SSD kind of works but then the monitor only reaches 10% brightness. Apparently it requires a “USB-C 3.1 Gen 1” cable or higher but because USB-C cords lack any form of external identification my only options are to either methodically try all of mine or buy a new one to add to the pile.

I really love that the world is standardizing around a single plug shape. It occasionally feels like a maniacal genie’s malicious interpretation of that world, though.

§ I saw the world’s largest statue of Our Lady of Guadalupe which is, improbably, located deep in the farmlands of rural Ohio. It was striking, seeing it out there, towering above the tree line.

§ Links

- Two variations on a theme: carbonated showers and fizzy gravy

-

§ Wow, this February felt 3.57% longer than normal.

§ I found three eggs when checking on my quails Monday evening. By the end of the week, I filled up a carton with eleven more. This must mean spring is close, right?

§ James Blake’s new(ish) album Playing Robots Into Heaven is an interesting listen. It is more playful than his beautifully rainy self-titled debut and more upbeat than his melancholy follow-ups. Listening to Playing Robots feels like weightlessly floating through steamy neon-drenched alleyways. He shares a lot of new ideas, mixing in fresh voices, dipping into glitch. “Big Hammer” could pass for a wacky passage of a Kelman Duran DJ set, “Tell Me” is, like, 150 BPM, and “I Want You To Know” is just straight up Burial. It all feels very free and experimental while never dipping into the discordant. Blake rewards careful listening but I also wouldn’t think twice about putting it on during a party. I want more music like this. A big masterpiece of a painting is impressive but looking through a sketchbook can be exhilarating.

§ I’m digging the new Apple TV drama Constellation so far. It’s got “snowy mystery” vibes interspersed with deep space claustrophobia. The acting can be a bit kludgy at times but hey, it’s got Jonathan Banks so I can’t complain too much.

My biggest critique is the length. It all feels unnecessarily drawn out. Too many characters weaving too many threads. I think they’re going to have a tough time wrapping everything up neatly. It could easily be a two-hour movie instead of eight fifty-minute episodes.

I’m working on a pet theory that the show is connected in some way to the Calls universe.

§ For those keeping track, I’ve caught my first cold since October. I think my career choices have all but ruled out being one of those people who only gets sick once a year.

One more Apple TV recommendation: if you’re looking for some good, old-fashioned, mindless TV viewing for a sick day on the couch, Earthsounds is about as good as it gets.

§ My recent trip to the nature reserve has got me thinking about the horizon.

I’ve never traveled west of the Mississippi River. There is something alien and alluring about the desert landscape. Head out far enough and it’s just two colors, maybe a gradient. A blue and beige Rothko. I can only imagine sound travels differently out there in the expanse.

Here in the Midwest the horizon is all trees. Even in the winter, after they have dropped all of their leaves, it’s a challenge to see more than a mile out in any direction.

-

§ An antsy week with stints of wanting to re-arrange my house and take a vacation. On Saturday I was this close to joining a rock climbing gym. I think it’s a kind of low-grade cabin fever. Later in the week, I stopped by my favorite trail deep in the local nature reserve. No signs of life yet, only frozen mud and creaky tree branches. After spending so much time cooped up inside, I appreciate the enormity of the park more than ever.

§ I put together a little project on a spare Raspberry Pi that displays photos of the earth using NASA’s API. New photos aren’t added as often as I would like—it updates every few hours, at most. Still, it is incredible to have an always-on “live” view of the globe right here on my desk.
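For anyone curious what such a project might look like, here is a minimal sketch. It assumes the photos come from NASA's EPIC imagery API (the post does not say which API is used), and the endpoint layout and field names reflect the public EPIC documentation as best recalled, so verify them before relying on this. The function names are illustrative.

```python
import json
from datetime import datetime
from urllib.request import urlopen

# Assumption: NASA's EPIC service is the source of the earth photos.
EPIC_API = "https://epic.gsfc.nasa.gov/api/natural"
EPIC_ARCHIVE = "https://epic.gsfc.nasa.gov/archive/natural"


def image_url(record):
    """Build the archive PNG URL for one EPIC metadata record."""
    taken = datetime.strptime(record["date"], "%Y-%m-%d %H:%M:%S")
    return f"{EPIC_ARCHIVE}/{taken:%Y/%m/%d}/png/{record['image']}.png"


def latest_image_url():
    """Fetch the newest metadata list and return its most recent image URL."""
    with urlopen(EPIC_API, timeout=30) as response:
        records = json.load(response)
    # Timestamps are "YYYY-MM-DD HH:MM:SS", so string order is time order.
    newest = max(records, key=lambda r: r["date"])
    return image_url(newest)


if __name__ == "__main__":
    print(latest_image_url())
```

A display loop would call `latest_image_url()` on a timer and redraw only when the URL changes, which fits a feed that gains new photos just a few times a day.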

§ Picture this: it’s Thursday night and a new exhibit gallery is opening early the next morning. At the last minute one of the technicians realizes that a critical microcontroller needs to be reprogrammed. But oh no! They don’t have an FTDI bridge with them. It’s at this moment that I suddenly remember I have a Flipper Zero in my backpack that I’ve been carrying around, basically unused, for years. I’ve never felt geekier.

Apologies to all of the Canadians out there.

§ The Bezzle, Cory Doctorow’s sequel to Red Team Blues, is out. The audiobook sample he released last month didn’t exactly hook me. The protagonist, Marty Hench, has an overconfident, self-righteous, cynical worldview that I find off-putting. Still, I backed the Kickstarter a while back so I have the MP3 files waiting for me right here in my inbox. I’ll give it another try soon.

§ I started watching The Deuce, David Simon and George Pelecanos’ lesser-known follow-up show after The Wire. Is it as good as The Wire? Probably not, but it feels similarly ambitious.

Simon and Pelecanos clearly have a knack for writing believable cities, cities that continue moving after the cameras stop, cities that are alive. My mind keeps going back to Synecdoche, New York. It is almost as if Simon and Pelecanos spend years toiling up front, crafting characters and meticulously developing atmosphere, before finally casting some sort of ancient spell that sets everything in motion so they can then just leisurely stroll down the street and document at will, free from the need to direct. As if there is some impossibly large sound stage hidden in the desert outskirts of LA and if you can manage to find your way inside you’ll find D’Angelo Barksdale sitting right there on his orange couch.

§ Links

-

§ Lot of experiments with projection mapping this week. MadMapper is such fun, powerful, mature software. I spent a solid few hours just playing around with the real-time audio reactivity features Friday afternoon.

The one big downside is that it only runs on macOS and Windows. I have a hard time trusting either operating system to run headless for a long time without requiring occasional intervention. Nevertheless, I think I’m going to make heavy use of it for my next exhibition.

§ OpenAI announced a video generator, Sora, hot on the heels of Google’s Lumiere model. Right off the bat, I have to say OpenAI’s sea turtle is way more impressive than Google’s.

These new video models still have some of the fun surprise moments that AI image generators used to have back in the GAN days but, overall, OpenAI, Google, and other large AI labs are all waiting to publicize their work until it is much more polished. It is an understandable decision but kind of disappointing too. Unpredictability spurs creativity.

§ My new passport arrived in just under a month—I’m pleasantly surprised.

§ Following the recommendation of James Reeves I started reading The North Water. The language is visceral, grimy, and raw in a way that keeps reminding me of Ulysses.

§ Last year, after Apple introduced the Vision Pro, I said the EyeSight feature was “the defining innovation” of the headset. I want to put another stake in the ground now that the headset is out in the world and say that I still stand by that statement. Yes, the outward facing display has lower fidelity and is much darker than Apple’s marketing lets on but I think it is still a crucial feature. Meta’s Quest 3 looks silly, like a chunky plastic blindfold, in comparison.

§ The opening credits of True Detective: Night Country continue to be the best part of the show. Everything else… eh.

§ Caroline and I went out for a walk along Lake Erie on Saturday and left with icy ears and chilly driftwood as souvenirs.

-

§ I attended a Vision Pro demo at my local Apple store on Tuesday. Over the eight months since its announcement I had absorbed so much information about the device. I had a lot of abstract knowledge about the technology involved but no idea what to actually expect once the device was on my face. Would my Mac forever feel like outdated technology? Would I start scrounging around for $3,500 to spend on my own headset?

My immediate impression was that the pass-through video was surprisingly bad—blurry, weird white balance, and noticeably grainy. I didn’t get the opportunity to stand up and walk around; however, I got the impression motion blur might make that a nauseating experience. The field of view was limited too, with stark black vignetting in the periphery. There were a few large posters on the other side of the Apple store that I could read perfectly fine without my glasses that were too blurry to decipher in the Vision Pro with the Zeiss prescription lenses inserted. Maybe my glasses were scanned incorrectly?

On a more positive note, the eye and hand tracking were both totally impressive. It’s kind of weird to use your eyes as a cursor but I think it would become perfectly intuitive with practice. It felt like magic, honestly.

As I mentioned above, I did not get the opportunity to stand up, walk around, and re-position windows in space—ya know, Spatial Computing—which is disappointing because I think it is the new idea here.

At the end of the day, maybe this subpar experience is for the best. I’m not going to have any trouble whatsoever sitting out on this initial model.

§ The days are getting longer, the sun now sets after 6:00pm.

A sunny Sunday gave me the chance to do a big mid-winter deep cleaning of the greenhouse for the quails. As my hobbies evolve to be increasingly outdoor, the winters have felt more and more inconvenient. I was super thankful for the opportunity to spend an afternoon outside. I also took a moment to look for signs of life in our garden. The garlic I planted back in November is all beginning to sprout.

The warm weather has brought with it periods of intense, high velocity rain. Drops that could bruise, tenderizing the earth in preparation for spring.

§ True Detective Night Country, at first glance, feels like Fargo only much more self-serious. I’ve realized I’m attracted to the atmosphere of cold snowy mysteries and polar horror—see: A Murder at the End of the World—that said, I don’t find this season compelling at all. I keep watching and re-watching the first four episodes thinking I must have missed some important plot point but I haven’t, nothing coherent has happened and it all comes out feeling like a confused jumble of prestige TV tropes. The edges have been polished off, it is so smooth that there is nothing to hold on to, nothing to make an impression in my memory. It triggers some kind of immediate media amnesia.

§ Mr. & Mrs. Smith feels inventive and fresh. It is funny enough to not feel too heavy while serious enough to not feel frivolous. Unfortunately I don’t think they quite stuck the landing but it was totally worth the ride.

§ Links

- A “drawing toy” inspired by Peter Vogel—“Instead of the toy making a drawing, it is a drawing that you can re-make every time you interact with it.”

- Cat itecture

-

§ I would like to see hardware make a comeback this year. The past decade has been full of increasingly homogeneous gadgets with software differentiation. We might be in for a change soon though. Apple’s Vision Pro is here, the Rabbit R1 was the CES standout, OpenAI is said to be in talks with Jony Ive to create hardware for AI, Humane’s Pin may or may not prove successful but it is inarguably ambitious.

One thing to keep in mind when evaluating all of these new gadgets: smartphones have been more or less perfect for the past five years. All of these new devices will be weird and unrefined in comparison. That’s okay though, that’s the exciting part.

§ Speaking of, Vision Pro reviews are out. The verdict? Exciting paradigm held back by the current state of hardware. I am most interested to see the story nine months from now. Will most Vision headsets be pushed to the side, off into drawers full of other forgotten technologies? Will it find a foothold in popular culture as an expensive, coveted status symbol like the original iPhone?

Ok ok, I’m already getting sick of all of the Vision Pro coverage so I’ll leave it there for now. That said, I booked an in-store demo for next Tuesday…

§ Ok, one last adjacent topic: I tried Crouton, the cooking app featured prominently in Joanna Stern’s Vision Pro review. The iOS interface has a hands-free wink navigation mode. Close your right eye to navigate forward through a recipe’s instructions, close your left eye to go back. It is a ridiculous gimmick but also surprisingly practical. Particularly, I imagine, if you are more deft at winking than I am.

§ I passed my 100 day streak of completing Puzzmo crosswords. I’ve fallen out of the habit of doing any of the other Puzzmo games with much regularity but I still have a lot of affinity for the service. There is something quaint and straightforward, almost Web 2.0 about it.

§ I resubscribed to Stratechery after canceling around mid-December. I realized that, although Ben’s views on business and government regulation sometimes annoy me, I was missing the work he does with others—particularly Dithering and his interview series.

§ I read the first few chapters of Michael Lewis’ Going Infinite after reading an interesting (though long) review of the book by Zvi Mowshowitz.

Lewis faced a lot of criticism when the book was published for being too sympathetic towards SBF. None of that criticism feels particularly warranted though. Lewis paints Sam as unempathetic, supercilious, and cold. In fact, Sam comes off as so unlikable that I’m finding it hard to stick with the book. If not for the sheer craziness of the story I probably would have dropped it by now.

§ At work we started brainstorming our next big exhibition, slated for June. We’re leaning towards Life in Space. Think: geodesic domes, inflatable habitats, 3D printed architecture, programmable lighting to simulate a day-night cycle. This is one of my favorite parts of the creative process, where it’s all nebulous vibes and ambitious ideas.

§ The sun shone on Thursday for the first time in more than a month. Welcome back.

§ Links

- Iconfactory’s Project Tapestry

- In Loving Memory of Square Checkbox

- Continuing the theme: In Praise of Buttons

- Lenticular photos

-

§ I participated in a focus group discussing ways to help hands-on interactive museums design great engineering challenges. It was fun to meet a bunch of creative peers from around the country. One thing we all agreed on—no one ever wants to read signs. Which, sure, obviously, but then someone in the group mentioned something that really set off a lightbulb in my head: traditional exhibit signage can inhibit guests from analyzing, hypothesizing, and making meaning themselves. There is no need to think when the sign can give you immediate answers.

So, good exhibit design should be intuitive, which should negate the need for pre-interaction instructional signage. Then, afterwards, you want to leave some time and space for contemplation before revealing “how the magic trick works.” How do you pull this off? I’m not sure, yet, but it’s an exciting challenge.

§ I got the tickets for my upcoming trip to Boston. It is a city I’ve always wanted to visit—one I almost stopped in during a road trip to Rhode Island a few years ago. I’ll be there in late March so I’m expecting weather somewhere between an apocalyptic polar vortex and balmy 60s.

§ Speaking of balmy, due to an HVAC quirk my corner of the office has been in the mid-80s all week. I kept finding myself in a sort of dazed half-conscious stupor. It turns out my brain effectively stops working in those temperatures.

§ Caroline and I hosted my dad for dinner on Sunday and then her sister on Monday. We made butter chicken both times—an instant hit.

§ My current rewatch of The Leftovers has been hampered by the realization that it is basically a vehicle for making characters less likable.

- Kevin, the protagonist, was deeply unlikable from the start.

- Nora was great in the beginning, she single-handedly carried season two on her back, bringing much-needed pathos to the story. By season three she’s more spiteful and depressing than anything else.

- Father and daughter John and Evie Murphy started off season two as totally normal, stable people. By the end of the season, Evie has joined a cult and John has tried to murder multiple people.

- Matt might be the single exception, becoming significantly more likable as the show progresses.

Don’t get me wrong, the writing is good—Damon Lindelof wrote unlikable characters well. At the end of the day, though, it doesn’t add up to something I get too much enjoyment out of watching.

§ I started on Reservation Dogs. It’s a shame it took me so long to get around to it. A unique premise! Thirty minute episodes! It is a comedy so the characters can be caricatures at times but they are also real, recognizable, human.

§ If we’re talking media, though, I would be remiss if I didn’t tell you there is a new Longmont Potion Castle album out. I wish I knew how to introduce others to LPC but it might be impossible so instead I will leave you, curious reader, with a sample.

§ It has been foggy here in Cleveland for two days straight.

§ Links

- Why You’ve Never Been In A Plane Crash—“It’s often much more productive to ask why than to ask who. In some industries, this is called a “blameless postmortem,” and in aviation, it’s a long-standing, internationally formalized tradition.”

- Downpour, a new game design tool reminiscent of Twine

- The boredom device—“Sort of like TikTok, but in reverse.”

- M.G. Siegler loves the AirPods Pro volume swipe gesture. I, on the other hand, find it totally unintuitive.

- Winnie Lim’s sketchbook

- Restaurant menu design trends

-

§ Temperatures dropped by twenty degrees overnight and then stayed in the single digits all week. There was really no wind either. It was just instantly, persistently cold. Everything was in stasis, frozen in place. Hey, at least I’m not in Calgary.

We moved the quails into makeshift housing in the basement. They probably would have been fine in the backyard greenhouse but it would have required more frequent check-ins to make sure their water doesn’t freeze.

§ Speaking of, Lake Erie froze for the first time this season. The gulls are not pleased.

§ As warned, Monday was a particularly busy day at work. All told it was five times the traffic of a typical day. It ended up feeling a bit anticlimactic though. After all of the nervous anticipation leading up to the day, I was looking forward to more drama and excitement. There is always next year.

§ On Tuesday a group of former students came to check out the exhibit I designed. It was great to see them again and to get their perspective on something I had worked so hard on.

§ I found Casey Newton’s writing on Spotify’s role in the demise of Pitchfork insightful:

For the most part, and particularly in the early days, Pitchfork focused on music that you would almost never hear on the radio. And to the young people it served, for whom $18 was a lot of money, the publication provided a valuable service. By highlighting music that was actually worth spending money on — and calling out music that was not — it helped its audience expand its musical horizons and save money at the same time. It was the Wirecutter for music, long before the Wirecutter was even born. […]

The most important change arrived in 2006, when Spotify was born… Before Spotify, when presented with a new album, we would ask: why listen to this? After Spotify, we asked: why not?

§ While we’re on music, there is no other way to say it: 3D Country kicks ass. It’s like if Thurston Moore, Frank Zappa, Alec Ounsworth, and Yamantaka Eye formed a supergroup.

Also, after reading recommendations from Tracy Durnell and James Reeves I finally gave Orville Peck’s album Pony a listen. It hasn’t fully clicked with me yet but the more uptempo top half of the album is undeniably fun.

There is an interesting thread connecting these two albums. An emerging genre. Let’s call it Millennial Americana for lack of a better name. I’ll be on the lookout for more examples of the theme.

§ I have a soft spot for sound machine apps. There are often occasions where I would like to have some kind of audio on in the background but I’m not in the mood for any of my usual music, podcasts, or audiobooks. Not Boring Vibes, a poorly named but well-designed app, seems to be purpose-built for just this.

When you launch the app it generates an ambient soundscape based off of the time of day and your physical activity. The music slows down and gets increasingly ethereal as you wind down in the evening. If you decide to go out for a walk it will detect your movement and pick up the beat a bit. It is a lot like the new feature Mercedes recently previewed for their cars but, well, with less Will.i.am.

I like that my favorite “white noise” app always sounds the same—there is a comfort to that. Nevertheless, I find it fascinating that Not Boring Vibes always sounds different.

§ After passing the halfway point of The Mountain in the Sea I’ve started getting melancholy thinking about what I’m going to read after I’m finished. Well, I somehow didn’t realize Ray Nayler just this week published a new novel, The Tusks of Extinction. It looks like it shares a lot of themes with Mountain. I can’t wait.

§ The Curse season finale was the hardest I’ve laughed in recent memory. I’m looking forward to re-watching the whole series soon. No single episode was perfect but, taken as a whole, it was ridiculous, surreal, hilarious, awkward, and intelligent to an extent I’ve never seen before.

§ Links

-

§ All rain all week. Everything is damp. My boots squish with each step, the hallways at work are littered with strategically positioned buckets to catch stray drips. Late-evening cold spells crystallize each droplet into tenuous waterlogged snowflakes that melt on contact into a thick layer of half-frozen phlegmy slush. It is beginning to feel like Cleveland has a monsoon season more than a proper winter.

§ It occurred to me that I haven’t listened to any episodes of MBMBAM since The Naming of 2023. Still, that doesn’t mean I wasn’t excited to see The Naming of 2024 pop up in my feed. Maybe a once-annual cadence is just right, at this point.

§ I discovered that my passport will expire one month before my wedding. While my fiancée and I are still in the process of planning what exactly we will do for a honeymoon, I’ll need to renew it to keep all of our options open. Dear reader, when was the last time you had to renew your passport? You might expect it to be a process that has been streamlined by technology over the past few decades—it is not. Where is the USDS when you need them?

§ Apple announced that they will begin shipping Vision Pro headsets early next month. I’m confident that, in a year or two, the next iteration of the device will be both an order of magnitude better and an order of magnitude less expensive. Nevertheless, I can’t wait to read reviews and, maybe, try one out in-store.

§ I am really enjoying my trial of 37signals’ new calendar, particularly their addition of “sometime this week” miscellaneous tasks. Unfortunately, the only way to use it is with a Hey email account, which I am not interested in switching to. I hope that they spur some innovation from others though. I’ve mentioned before how sad I find the current state of calendar applications and I think this is a step in the right direction.

§ I have no connection to kaiju flicks. Still, I found Apple’s new marquee show Monarch pretty compelling. It can definitely get a bit silly and overwrought at times, particularly in its more monster-centric scenes. Overall, though, the characters are good, the acting is good, and the CGI is great.

§ Speaking of TV, The Great Pottery Throw Down has been the perfect low-key show to pair with a fairly hectic work week. I’ve gotta say, I don’t appreciate the change of judges with season three though.

§ For the sake of public accountability I should note that I am just about halfway through The Mountain in the Sea, a hair into part three. I’ve been averaging a slow but steady pace of around one hundred pages every two weeks. Not voracious by any means but I’m glad I am sticking with it.

§ Lots of excited, ominous talk at work warning that next Monday, MLK day, is always our busiest day of the year. Wish me luck.

-

§ 2024. A leap year. The other night I had the famous dream where I found a secret extra room in my house. Leap years are sort of like that but for time, in a sense.

§ For the second year running I cooked bibimbap for my extended family’s New Year’s Eve celebration. It’s getting dangerously close to becoming a new tradition.

§ I kept reading good things about Rebecca Owen’s Chronicling app in various “best of 2023” lists so I decided to give it a try. Absent anything particularly useful to track, I landed on logging my caffeine intake. Now, this could be some sort of bias where seeing the chart each day reinforces a particular pattern but I was shocked by how consistent I am day-to-day. A latte when I wake up, coffee late morning, tea after lunch, tea pre-dinner, and tea in the evening. I would have thought there would be more variation but there really isn’t. I’m a creature of habit, I guess.

§ One of our quails laid an egg for the first time since late autumn. They have been picky recently, basically eating only their fancy marigold treats so it’s really the least they can do.

§ I designed and 3D printed a few candle holders—the next step on my strange beeswax candle collecting journey.

§ I’ve noticed that I’ve developed a very midwestern reflex of saying “excuse me” as I pass by coworkers in the hallway. Actual distance is irrelevant—I could be ten feet away. It’s more like an alternative to a polite greeting.

§ A sneak preview of this new season of weeknotes:

- Week 12 — Visiting Boston for the first time

- Week 15 — Total solar eclipse

- Week 20 — My birthday and also maybe visiting Baltimore for the first time

- Week 26 — One year anniversary at my current job

- Week 28 — My wedding

- Week 46 — A long overdue visit to Chicago

§ Links

-

§ The last week of the year, huh?

§ I got Caroline a Playdate for the holidays.

After being disappointed by the build quality of Teenage Engineering’s EP-133 I was nervous about the Playdate. I’m happy to say that heavy use over the past week has assuaged my fears—it is both well-constructed and absolutely adorable. Root Bear was an instant hit during all of the holiday get-togethers.

Caroline gifted me a Combustion Inc. meat thermometer which is, coincidentally, the exact same shade of yellow as the Playdate. I’ve only had the opportunity to try it once, briefly, but it seemed great. I think it will become particularly handy come grilling season.

§ Panic really won me over with the Playdate so I downloaded Untitled Goose Game on a whim. There is a lot to like—it is chock full of little puzzles and silly mischief. I ran into a lot of issues running it on my Switch, though. The camera and character movement were both frequently frustrating. Still, that didn’t stop me from beating the game in one marathon afternoon.

§ Christmas Eve and Christmas Day comprised fourteen different events across northeast Ohio. Can we all just agree on one time and place next year?

§ Post-Christmas I spent a few days exploring parts of Ohio I hadn’t visited before. I ended up seeing two taxidermied moose heads in as many days. The first was next to a candle making facility that has been in operation since 1869. As you might imagine of a 150 year old candlery, the fragrance was overwhelming. The second was at the offices of a local tea producer.

§ I was finally able to get a plumber to install our new dishwasher. It is one of those models with no visible buttons on the front panel which seems to be in vogue. I think it looks nice but I did quickly learn that the downside is that there is no way to know how much time is remaining on a particular cycle.

§ Fargo is really gunning hard for the best TV show of 2023. This week’s episode was particularly great. The puppetry scene reminded me of the wild animated act in the middle of Beau Is Afraid.

§ Asteroid City was odd. It’s as inspiring as all of Wes Anderson’s work but it ultimately rang a bit hollow. It was all maybe too artificial—too flat, precise, rehearsed. Everything was deadpan, robotic, and perfect but stripped of all emotion. Charles Pulliam-Moore, reviewing the film for The Verge, describes it as “exquisite and soulless”—apt. Its density would, I’m sure, reward repeat viewings. I’ll probably give it another shot sometime.

§ I finished reading part one of Mountain in the Sea. I didn’t realize how much I appreciated that Penumbra stuck with one character’s perspective for the length of the novel. Jumping between multiple perspectives, which Mountain does frequently, is often the reason I abandon novels partway through. There are inevitably characters I am less invested in and sometimes getting through those passages can be a slog. It isn’t a big problem yet with Mountain though. I am still engrossed with the story and the troublingly plausible dystopian world Ray Nayler has crafted. I would have preferred he stuck with Ha Nguyen’s central storyline though.

§ See you next year.

-

§ Happy winter, officially. From here on out, each day will be just a little bit brighter.

§ I read Mr. Penumbra’s 24-Hour Bookstore by Robin Sloan. Given my history of starting and then never finishing books, I’m happy to have finally broken the trend.

Penumbra wasn’t a particularly profound book but it was a bunch of fun. I quickly got sucked into Sloan’s world full of conspiracy, cryptography, and history. The characters, while maybe a bit cartoonish, are genuine. There is really no cynicism, irony, disillusionment, hatred, or violence. Everyone—including the antagonist—is driven by their desire to do the right thing. Everyone agrees on the goal; the conflict arises from the means to get there.

The Mountain in the Sea is next. Nayler’s writing conjures a vivid atmosphere. The first few chapters are rainsoaked. Crumbling towns punctuated by flood lights and occluded by mist. Entropy and decay and technological progress. I’m excited to read more.

§ Listening to Myke and Grey’s yearly themes episode is making me consider how I want to approach the new year. Resolutions have never been my thing but choosing a “theme”—a general guiding principle—seems like a smart idea.

I’m leaning towards “the year of curiosity.” Curiosity, I’ve begun to realize, follows a natural curve over the course of most people’s lives. At birth we are infinitely curious, everything is new and everything is interesting. But, as we get older, the curve slopes downward and we gradually become less interested in the world around us. A new way of solving a problem is probably worse, there is no need to learn how something works because that is someone else’s job, change is to be avoided, etc. Curiosity takes practice. It takes intention.

§ I went to the Screw Factory holiday market. One of Cleveland’s biggest assets is its strong artist warehouse community. 78th Street Studios, Lake Effect Studios, the ArtCraft building, Hildebrandt. The holiday market was really my first excursion out into that world since the pandemic. I’m glad to see it’s still going strong.

§ I’ve never had to rely solely on a map for navigation. I got my driver’s license in the era of standalone GPS systems. Am I missing out on some forever lost freedom or does the knowledge that I have an always-on satellite connection give me the confidence to adventure more?

§ A Murder at the End of the World unfortunately continued to feel directionless up to and through this week’s finale. The show was ultimately a whodunnit murder mystery which is a fine premise, I guess. The problem is 1) the cast of characters—the potential perpetrators—has no depth. Viewers can’t theorize motives when each character is little more than a caricature. That ultimately doesn’t matter much though because 2) in the end the killer is revealed to be someone that would be impossible to deduce through clues left earlier in the show—breaking all of the standard fair-play mystery rules. I didn’t hate A Murder but I had much higher hopes coming from the Brit Marling / Zal Batmanglij power duo.

§ I picked back up on a project from July—a giant interactive capacitive touch synthesizer. I plan to get all of the little final touches done first thing next year.

§ Links

-

§ I got the opportunity to break out my calipers and fire up Fusion 360 to make a seriously intricate CAD model for the first time in a while. Two pieces that snap together with some space between to weave through circuitry. The two parts, together, are then threaded to screw into an existing PVC pipe fitting. I found the whole process—observing, measuring, building out parametric constraints—tickles my brain in a way similar to solving a solitaire deck. Super satisfying.

I couldn’t get the threading quite right though. What standard does the existing pipe conform to? BSP, ANSI, ISO, DSN, GP, JIS? 3D printing still makes for a slow iteration process so trying each option was infeasible. After spending at least an hour getting totally lost in the weeds I realized I can eliminate the pipe connection altogether and just redesign the component as a single solid piece. I need to be more vigilant for these rabbit holes in the future—zeroing too far in on a complicated detail should be a cue to take a beat to look at the problem from another perspective.

§ On Saturday I visited a local photographer’s downtown studio. The building he is in was recently sold so now he has until the end of the month to get rid of 50 years of accumulated film photography paraphernalia. It would have been a goldmine if I were putting together a darkroom but unfortunately I just don’t have a suitable place in my house for one at the moment. Nevertheless, it was really cool to see his collection—especially his large format panoramic view camera that shoots six foot long negatives.

§ iOS 17.2 includes Apple’s new Journal app. I’m not sure I am ready to commit to a new daily routine but I am intrigued by the app’s ability to collate data from across Apple’s various services.

It feels very much like a minimum viable product at the moment—it’s missing some important features like search and export—but it has good bones. I have a feeling it’s going to be one of those apps that quietly but consistently gets better over time.

§ My new EP–133 K.O. II finally arrived.

The good:

- It is a big tactile gadget with knobs, dials, buttons, and switches—a rarity nowadays.

- The documentation is thoughtful and thorough

- It’s powerful—it’s going to take me at least a year to get comfortable with all of its various features—while still having a low enough barrier to entry to be fun right away.

The bad:

- It arrived with a broken speaker which, I guess, is a common issue.

- Almost immediately after I fixed the above issue I accidentally erased all of the pre-loaded samples. It took a tedious 30 minutes to add them all back on.

I was ultimately able to fix the speaker, and I’m still glad I got the device, but it put a bad taste in my mouth in respect to Teenage Engineering’s quality control.

§ I have, so far, four work trips scheduled for next year. Plus a honeymoon sometime mid to late summer. I’m sure it will feel hectic and busy in the moment but I’m beginning to learn that my happiness is often positively correlated with how busy I am. Presumably at a certain high level of busyness the correlation would totally fall apart but I haven’t found that level yet.

§ Have you ever thought about how the saying “head over heels” really doesn’t make any sense? As best I can tell the more logical “heels over head” was common and, over time, it morphed into the phrase used today. Why though? Because the latter sounds better? Does “head over heels” actually imply a 360° rotation, more dramatic than the original’s 180°?

§ Go ahead and get yourself a nice heavy sweater to fortify yourself against the approaching winter. It’s absolutely worth it.

§ Links

-

§ This marks my first full year of writing these weeknotes. I expected them to be a catalyst for introspection, and they have been. It is great to have a dedicated space to catalog the things I did, learned, noticed, and felt each week.

What I didn’t expect, and where I think they actually deliver the most value, is as a bulwark against the acceleration of time as I get older. Thinking back two years ago, for example, I couldn’t possibly pick out particular events from each week of the year—the past is all a big blurry jumble with just a handful of well-defined signposts. Over time I suspect I will get even more value out of these notes for exactly this reason. What, specifically, do you remember from, say, August 2012?

§ “Fall cleaning” has always felt more urgent and necessary than “spring cleaning” ever has. Now is the time to get the house comfortable to hunker down in for the winter. Come spring, all of my energy will be directed outside, sprouting seeds and preparing the gardens for summer.

§ I’ve recently come to appreciate the importance of letting creative ideas marinate for a while. Whenever I have even an abstract notion for a new project I’ll immediately explore it just enough to lodge it in my memory. Usually all that is required is making a page for it in my sketchbook. Other times I’ll make a rough prototype and shove it in a corner somewhere. All that’s important is that the idea has a firm hold somewhere in the back of my mind.

Later—sometimes years later—the idea will spontaneously re-emerge better than ever. During the intervening time my mind had been developing the idea for me in the background, working out the kinks and making connections to everything else I’d experienced along the way.

§ Overhead projectors continue to be one of the most interesting pieces of technology that are all too often overlooked. Like, come on, when was the last time you saw a liquid light show?

§ My fiancée didn’t know that I had the photic sneeze reflex until it came up as a minor plot point in this week’s episode of A Murder at the End of the World. The most reliable trigger, for me, is walking out of a movie theater into bright daylight. I guess it has just been ages since we’ve seen a movie in theaters, let alone one midday.

§ The new Squid Game reality show was, frankly, not particularly good. Nevertheless, it is remarkable how quickly I became invested in the contestants.

-

§ There was a big winter storm overnight Monday. All of the schools were closed the next morning. I had been procrastinating on winterizing the greenhouse for the quails but they did totally fine even with temperatures in the low 20s. I ran some power out there and wired in a heat lamp for the time being. The vibrant orange glow illuminating the greenhouse at night makes it look like some kind of tiny winter rave hut. Or, perhaps, like it is just a little bit on fire. Either way, no calls from my neighbors yet so that’s good.

§ According to Apple Music “Replay” I listened to 6,621 minutes of music this year—four and a half days, basically. Just wait for my Overcast stats. I have a feeling those are going to be… revealing.

§ I am currently obsessed with the idea of articulated furniture. What happens when you cross a folding seat with a strandbeest?

§ I transformed a dingy old basement shower into a tiny grow room complete with full-spectrum lights that automatically turn on and off using smart switches. I made my lemon and lime plants a home there for the winter. For some reason they are both flowering now? Odd.

§ Aside from a few of the extra challenging “special world” levels, Caroline and I have just about 100%’d Super Mario Bros. Wonder. Overall, it is definitely easier than DK: Tropical Freeze but no less fun. I was originally on the fence about buying the game—I’m totally glad I ultimately did.

§ The new season of Fargo has been excellent so far. It feels like the show runners went back to the basics to an extent they hadn’t since season one. I can’t wait to see where it goes.

§ On the other hand, I’ve got to say A Murder at the End of the World is starting to lose me a little bit. I’ll still continue watching but it feels a bit directionless at the moment.

§ I saw romanesco broccoli for the first time. How did I never know about these?! They look wild.

§ Links

-

§ Happy Thanksgiving

§ What would a synthetic cairn look like? Can we take anything from the elegant idea of stacking stones as a place-marker and apply it to collaborative sculpture? Build together with everyone who crosses through a specific site over time. Inevitably someone will dismantle it all but that can be just as poignant.

§ I wrote up a list of exhibit design challenges for an upcoming collaboration with a student group.

Create…

- an exhibit that requires cooperation between multiple people

- an exhibit that is meaningfully different each day

- an exhibit that cleverly communicates the passage of seconds

- an exhibit that cleverly communicates the passage of years

- an exhibit that rewards careful observation

- an exhibit that can be enjoyed equally by both blind and sighted guests

- an exhibit that is site-specific

§ New appliance alert! While 2023 is nearly over, we couldn’t let “the year of new appliances” go by without replacing one more faulty appliance.

First it was our washing machine. Shortly after, our refrigerator stopped working. A few months later it was our oven and now, finally, we are replacing our shoddy dishwasher. Delivery is December 6th so I can’t say much more until then but I’m frankly pretty excited.

Let’s all hope for a better theme for 2024.

§ With my focus on the kitchen, I decided to take care of something else I had been interested in for a while—I replaced my faucet and added an under-the-sink water filter unit. Even though it required a fair deal of awkwardly craning my body and sticking my head underneath my kitchen cabinets it was all a much easier job than I had feared.

§ I started watching Brit Marling’s new miniseries A Murder at the End of the World. You might remember her as the creator and star of the captivating and brilliantly weird show The OA a few years ago. The new series is ongoing with only three episodes out at the moment but so far, so good.

I’m also, of course, now re-watching The OA. It is such a special show. Completely weird but unfailingly committed and self-assured. A Murder at the End of the World doesn’t yet have quite that same spark but I have full faith that Brit Marling and Zal Batmanglij can deliver something special.

§ I ordered Teenage Engineering’s new EP–133 K.O. II sampler. It looks like a very beefed up version of one of their Pocket Operators, a series of devices I absolutely adore. I can’t wait to try it.

§ Checking out the album-centric music app Longplay reintroduced me to an old favorite album from a few years ago.

The album Woodkid For Nicolas Ghesquière—which was produced for a wild 2019 Louis Vuitton fashion show—is pretty darn close to a perfect record. I would love to find more music like it but I can’t quite articulate its genre cogently enough to search for similar artists. It is big, orchestral, and percussive. It has sparse but compelling vocals. It is overwhelmingly analog but it is not afraid to use digital manipulation where it is effective. Even Woodkid’s other music isn’t quite comparable. Tyondai Braxton’s Central Market feels like a sibling but other than that I am coming up blank.

§ Sam is back and I have a new least favorite word: ouster. I never want to hear the word “ouster” again.

§ Links

-

§ Had a big meeting to kick off a two year collaboration with the Alliance of Immersives in Museums. Got to meet a bunch of techy creative people from interactive science museums around the country.

A big topic was how to balance the flexibility and speed of pure immersive experiences with the unique possibilities that can only arise from traditional tactile exhibits.

§ How can desire paths be used in interior design? Do you keep the space reasonably empty and then add affordances—benches, walls, signage, trash cans—only after a period of careful observation? Do you make a reasonable initial guess and then remove and rearrange later? Is there some kind of carpeting that can reveal the paths tread later, perhaps under some special light?

§ I bought the new 2D platformer Super Mario Bros. Wonder, hoping to capture some of the magic from the surprisingly excellent Donkey Kong Country: Tropical Freeze.

- The local co-op play is great. Had I not been spoiled by Tropical Freeze’s ingenious backpack mechanic I would be completely happy.

- Doing away with time limits was the right call. Now it is time to rethink the coin / life model. Celeste has had the right idea here all along: unlimited time, unlimited lives, the challenge comes from clever level design.

- In general it has been a really satisfying bite-sized game. Each level takes maybe five minutes or less so it’s easy to pick it up for a quick round during a moment of downtime.

§ I’m trying out Arc, a new-ish web browser that feels more like an operating system for the internet. It is weird and slightly fascinating.

A giant downside: their mobile app is awful. You can only open one tab at a time in the most unintuitive way imaginable. It is the opposite of the old Apple design adage of “it just works”. Although apparently a new, fully-featured mobile app is under development. Until that arrives I’ll probably stick with Safari for most of my browsing but I am grateful Arc is here and unafraid to throw away all assumptions on what a web browser can be.

§ I was hopeful OpenAI would decrease the price of their ChatGPT Plus subscription in the near future. $20 per month is a truly wild price. It is my most expensive subscription save for my cellular data plan. It says a lot that it is even remotely worth it at that price. Well this week OpenAI decided to pause new signups due to a “surge in usage” so the possibility for a price decrease any time soon feels pretty remote.

§ But also! The OpenAI board unexpectedly fired their CEO and co-founder Sam Altman on Friday. In response, their other co-founder Greg Brockman and at least three other senior researchers quit. So who knows! Anything can happen at this point!

§ I got a new Creality CR-M4 for work. I can’t attest to the quality yet—I’ve only had time for a couple of small test prints—but what I can say is that it is giant. Effectively an 18x18x18" build volume.

§ Links

- Unused three letter acronyms — there are a lot of them

- A blinking LED to assist with maintaining focus?

- Watt lies beneath

-

§ Some seriously smoky steak searing prompted me to check on my smoke detector. It turns out it doesn’t work and probably hasn’t for years.

I ordered a new one. Thanks smoky searing!

§ In other food news, I had three—count ’em three—different soups for dinner this week. Chili verde, chili con carne, and kapusniak. Is this a sign that autumn has truly arrived?

§ I am beginning to realize that I enjoy creating objects, structures, and opportunities that facilitate self-directed learning more than I enjoy traditional teaching (“direct instruction”). This is all sort of a vague abstract notion at the moment but it was an interesting realization nonetheless.

On a very related note, the jungle gym turned 100 this year. I would love to design a playground sometime.

§ I’ve once again subscribed to a month of ChatGPT Plus. Initially, I was primarily excited to try their new voice feature. It is indeed impressive. I might try to make use of their recently announced text-to-speech API for article narration.

The new image uploading and analysis feature is even more incredible. It can act as a completely passable art critic.

Finally, the custom GPTs feature may have provided the most tangible, real world LLM use-case to date. I uploaded my health insurance policy’s 100 page information booklet to the GPT’s backend knowledge-base, started up a new chat session, and then was able to ask the custom GPT to explain the charges on an unexpected medical bill I recently received. Is it dumb from a privacy standpoint to upload all of this medical information to ChatGPT? Unequivocally yes, but the utility is unmatched.

§ The way Matt Webb describes his approach to learning and making really resonates with me.

For the last decade or so, the way to bring new products into the world was to think carefully and make PowerPoint decks and cover the walls in post-its. No longer. The landscape of possibilities is unknown so the appropriate approach is to roll your sleeves up. Things-which-are-made teach you about the technology, open up new thoughts, and (vitally!) let you work with people who aren’t as close to the technology as you but probably have better ideas.

I’ve always found that the best ideas arrive when a group of people come together around a physical prototype. Abstract brainstorming sessions all too often breed passionate opinionated disagreements while prototypes inspire solutions.

§ It is a weird new feeling, being proud of my state like this. I have to hand it to Ohio though because time and time and time again recently the state defied my pessimistic expectations in the polls. Great job!

§ I know last week I said something silly like the daylight saving time change will encourage me to “embrace the night”. In actuality I’ve been going to sleep earlier than ever but waking up early enough to watch the sunrise. It has been nice.

§ Links

-

§ I spent Tuesday evening pulling up the remainder of my vegetables in advance of an overnight freeze.

The tomatillos were the biggest surprise. They matured late so I was left with a harvest of just under four pounds—a fraction of what I have been getting the past few years. Over the weekend I used those, a basketful of poblanos, and some Hungarian peppers to make chili verde. A delicious autumn meal.

Although the San Marzano gave me a lot of blossom end rot trouble early in the season, it ultimately became one of my new favorite plants. I’ll certainly be growing more next year.

I’m hopeful for a longer, more plentiful growing season next year.

§ I planted six different varieties of garlic for a spring harvest. I can’t wait to see which one fares best.

§ We had the first snowfall of the year overnight Tuesday—very early! October 31st, for future reference.

§ Leaf blowing takes more skill than I appreciated. Raking is almost certainly more efficient at this point. The best I can manage with the leaf blower is to transport the leaves to other, equally inconvenient, areas of my yard.

§ During some of the warmer evenings this week I’ve been working on winterizing the backyard greenhouse. Our plan is to keep our quails there for the winter instead of moving them into the connected garage like last year. The best practice, as far as I can tell, is to use bubble wrap as an extra air barrier. I am looking into different options to provide active heating too.

§ As I write this, daylight saving time will have just started (or ended? I can never remember) which means, in practice, it will start getting dark at like 5:30 rather than 6:30. I used to get pretty upset about this but the truth is that there are just fewer hours of daylight at this time of year and there is no great solution to that other than moving somewhere more equatorial.

This year, I will embrace the night.

§ My big exhibit debut was a huge success. It has been a wild few months and this past week was a particularly big push to the finish line. Seeing it all come together I am, in one sense, exhausted. More than that, though, I am ready to do it again.
